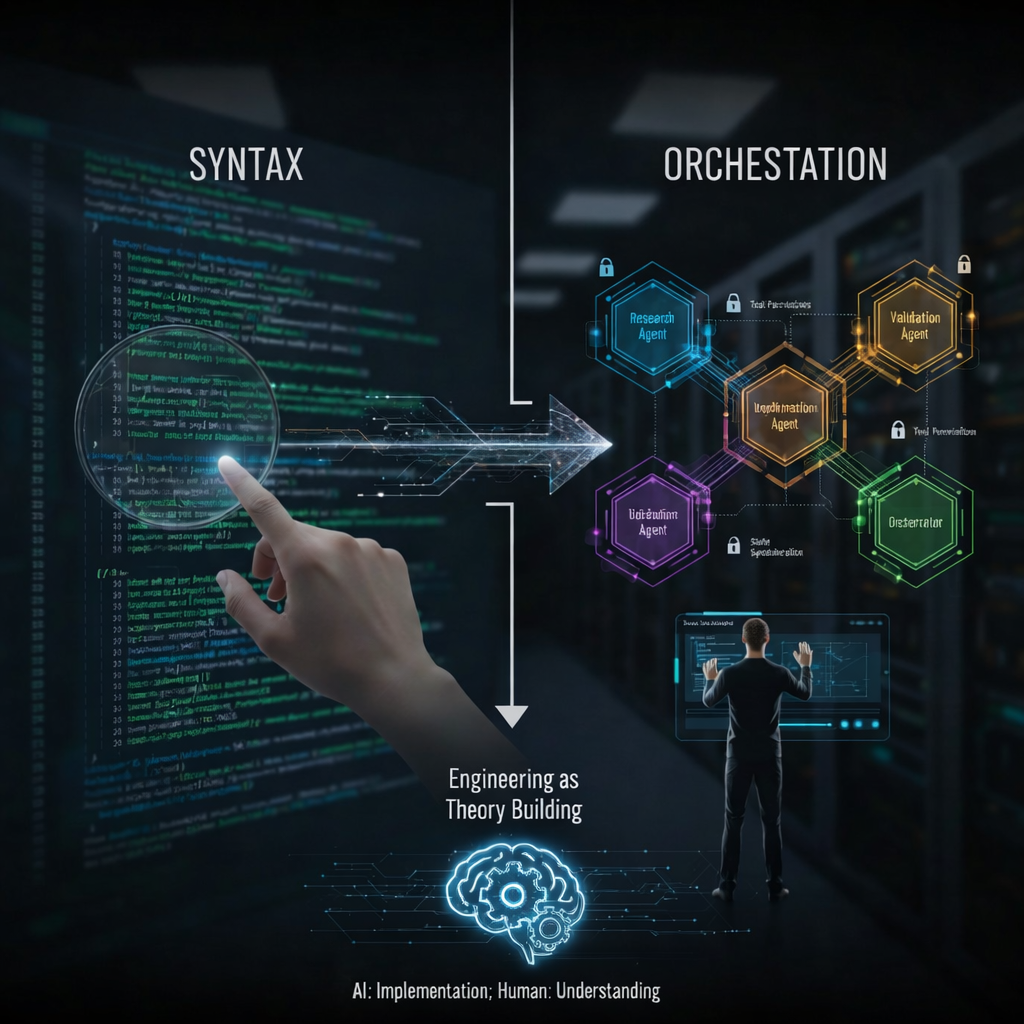

For most of my career, software engineering meant mastering syntax.

It meant understanding language quirks, framework patterns, concurrency models and being able to debug a race condition by mentally stepping through execution paths. Precision lived at the line-of-code level.

But as we move deeper into 2026, that identity is shifting.

We are entering what feels like the microservices moment for artificial intelligence: a transition from writing deterministic code to orchestrating semi-autonomous systems that reason, plan and execute tasks across tools and environments.

Engineering is no longer just about implementation.

It is increasingly about orchestration.

The Workflow Shift: From One-Shot Prompts to Multi-Agent Systems

The early phase of generative AI was dominated by prompt engineering — a one-shot interaction where we asked a model to generate a function, a class or even a module.

In that mode, the model behaved like a brilliant but isolated brain: powerful, yet disconnected from real system context.

Today, workflows are moving beyond that pattern.

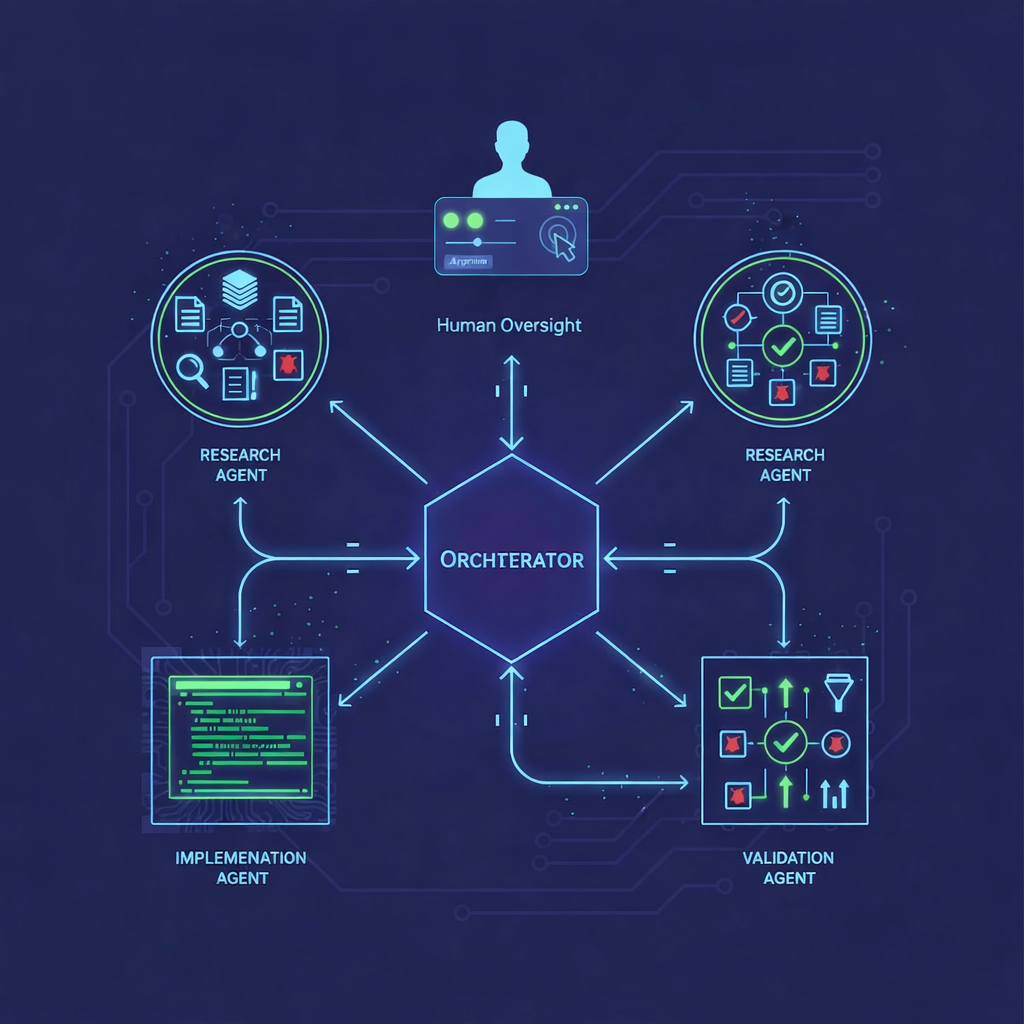

Instead of one large language model acting alone, we are building structured pipelines where multiple agents collaborate:

- A research agent gathers context

- An implementation agent writes code

- A validation agent tests or critiques

- An orchestrator coordinates execution
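To make that division of labour concrete, here is a minimal sketch in Python. The agent functions, the `Task` structure and `run_pipeline` are hypothetical names for illustration. Each "agent" is a plain function standing in for what would, in practice, be a model call with its own prompt and tools; the orchestrator runs research once, then loops implementation and validation until the validator approves or a round limit is hit.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    """Shared state passed between agents (hypothetical structure)."""
    goal: str
    context: list[str] = field(default_factory=list)
    code: str = ""
    feedback: list[str] = field(default_factory=list)
    approved: bool = False


# Each agent is a stub; in a real system it would wrap a model call.
def research_agent(task: Task) -> Task:
    task.context.append(f"notes for: {task.goal}")
    return task


def implementation_agent(task: Task) -> Task:
    # Prior feedback changes the next attempt, so retries can differ.
    attempt = len(task.feedback) + 1
    task.code = f"# attempt {attempt} for {task.goal}\n"
    if attempt > 1:
        task.code += "def handler():\n    return 'ok'\n"
    return task


def validation_agent(task: Task) -> Task:
    # Critique step: reject drafts that contain no function definition.
    if "def " in task.code:
        task.approved = True
    else:
        task.feedback.append("no function defined")
    return task


def run_pipeline(goal: str, max_rounds: int = 3) -> Task:
    """Orchestrator: research once, then implement/validate until approved."""
    task = research_agent(Task(goal=goal))
    for _ in range(max_rounds):
        task = validation_agent(implementation_agent(task))
        if task.approved:
            break
    return task


result = run_pipeline("add a health-check endpoint")
print(result.approved, len(result.feedback))  # True 1
```

The round limit matters: without it, a validator that never approves would keep the loop running indefinitely.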

This approach mirrors how human engineering teams operate. But it introduces new classes of problems:

- Inter-agent communication protocols

- State synchronization across agents

- Tool permissions and execution boundaries

- Failure handling and rollback logic

These are not prompt problems.

They are architectural problems.
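Failure handling and state synchronization show why. Below is a minimal sketch that assumes the agents share one mutable state object and that the orchestrator checkpoints it before each step. `run_with_rollback`, `StepFailed` and the two example steps are hypothetical names. An in-memory snapshot can only undo in-memory changes; external side effects such as API calls or database writes need their own compensating actions.

```python
import copy


class StepFailed(Exception):
    """Raised by an agent step that cannot complete (hypothetical)."""


def run_with_rollback(state: dict, steps: list) -> tuple[dict, list[str]]:
    """Apply steps in order; on failure, restore the last good snapshot.

    Each step is a callable that mutates and returns the shared state.
    Returns the final state and a log of what happened.
    """
    log = []
    for step in steps:
        snapshot = copy.deepcopy(state)  # checkpoint before the step runs
        try:
            state = step(state)
            log.append(f"{step.__name__}: ok")
        except StepFailed as exc:
            state = snapshot  # discard partial changes made by the failed step
            log.append(f"{step.__name__}: rolled back ({exc})")
            break  # stop here; a human or the orchestrator decides what happens next
    return state, log


def add_schema(state):
    state["files"].append("schema.sql")
    return state


def run_migration(state):
    state["files"].append("migration.py")  # partial change...
    raise StepFailed("database unreachable")  # ...followed by a failure


final, log = run_with_rollback({"files": []}, [add_schema, run_migration])
print(final)  # {'files': ['schema.sql']}
print(log)
```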

The Architect’s Pivot: From Code Author to System Designer

As AI-assisted coding becomes commonplace, syntax is becoming commoditized.

Recent industry reports suggest that in many professional environments, over 40% of newly written code now involves AI assistance, and that share appears to be rising.

As a result, value shifts upstream.

The engineer’s edge is no longer typing speed or memorized APIs. It is:

- System design

- Architectural clarity

- Boundary definition

- Risk modeling

- Governance

Emerging standards like the Model Context Protocol (MCP) illustrate this shift. MCP standardizes how agents connect to external tools and data sources. As a result, the engineering work moves away from what a model can generate and toward what an agent is allowed to access, modify and execute.

The role becomes less about “write this function” and more about:

- What data should this agent see?

- What actions should it be authorized to take?

- What logs and audit trails must it produce?

- What human checkpoints are required?

We are grounding intelligence within clearly defined constraints.
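One way to make the four questions above explicit is to write the answers down as data instead of leaving them implicit in prompts. The sketch below is a hypothetical policy record in Python. It is not the MCP schema or any vendor's format. It only illustrates how those questions become reviewable, version-controlled configuration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentPolicy:
    """Answers the four questions for one agent (hypothetical format)."""
    name: str
    readable_data: frozenset[str]         # What data should this agent see?
    allowed_actions: frozenset[str]       # What actions may it take?
    audit_fields: tuple[str, ...]         # What must every log entry record?
    needs_human_approval: frozenset[str]  # Which actions require a checkpoint?

    def can_read(self, source: str) -> bool:
        return source in self.readable_data

    def can_do(self, action: str) -> bool:
        return action in self.allowed_actions

    def requires_approval(self, action: str) -> bool:
        return action in self.needs_human_approval


deploy_agent = AgentPolicy(
    name="deploy-agent",
    readable_data=frozenset({"ci_results", "release_notes"}),
    allowed_actions=frozenset({"open_pr", "deploy_staging", "deploy_prod"}),
    audit_fields=("timestamp", "agent", "action", "reason"),
    needs_human_approval=frozenset({"deploy_prod"}),
)

print(deploy_agent.can_read("customer_db"))           # False
print(deploy_agent.requires_approval("deploy_prod"))  # True
```

Keeping the record frozen means a running agent cannot widen its own permissions. A change has to go through code review like any other change.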

The Hidden Risk: AI Slop and Knowledge Debt

Acceleration brings risk.

When AI generates large portions of a codebase, we face a new form of technical debt — knowledge debt.

Code may compile and pass tests, yet no engineer holds a deep mental model of its structure.

This is where “AI slop” emerges:

- Repetitive logic that violates DRY principles

- Superficial abstractions

- Over-engineered patterns without architectural cohesion

- Security oversights due to shallow context

Industry analyses have already identified measurable increases in duplicated code in AI-assisted repositories. Security teams also report that auto-generated scaffolds frequently omit critical authorization checks, increasing exposure to Broken Access Control vulnerabilities, the top-ranked category in the OWASP Top 10 (2021).
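To illustrate the kind of omission described above, here is a hypothetical example that is not drawn from any specific report. The handler authenticates the caller but never checks ownership of the record. This is the insecure direct object reference pattern, a common form of Broken Access Control. The `Invoice` model, the in-memory store and both handler names are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Invoice:
    id: int
    owner_id: int
    amount: float


# Hypothetical in-memory store standing in for a database table.
INVOICES = {
    1: Invoice(id=1, owner_id=10, amount=99.0),
    2: Invoice(id=2, owner_id=20, amount=450.0),
}


class Forbidden(Exception):
    """Raised when an authenticated user requests a record they do not own."""


def get_invoice_scaffold(current_user_id: int, invoice_id: int) -> Invoice:
    # Scaffold-style handler: the caller is authenticated, but ownership is never
    # checked, so any logged-in user can read any invoice by guessing its ID.
    return INVOICES[invoice_id]


def get_invoice(current_user_id: int, invoice_id: int) -> Invoice:
    invoice = INVOICES[invoice_id]
    # Authorization check: the caller must own the record they request.
    if invoice.owner_id != current_user_id:
        raise Forbidden(f"user {current_user_id} cannot read invoice {invoice_id}")
    return invoice


print(get_invoice_scaffold(10, 2).amount)  # 450.0, another user's invoice
try:
    get_invoice(10, 2)
except Forbidden as exc:
    print(exc)  # user 10 cannot read invoice 2
```

Both versions compile, and both pass a test that only checks the happy path. Only a reviewer who asks "who is allowed to see this?" catches the difference.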

The most dangerous outcome is not buggy code.

It is incomprehensible code.

If engineers cannot explain why a system behaves a certain way, debugging becomes archaeology.

Engineering as Theory Building

In his 1985 essay “Programming as Theory Building,” computer scientist Peter Naur argued that programming is not about producing code; it is about building a theory of how the software relates to the real world.

That principle is more relevant now than ever.

AI can generate implementations.

It cannot replace understanding.

If we allow AI to handle not only execution but reasoning, we risk outsourcing the very skill that defines engineering: structured thinking.

The responsible path forward is not rejection of AI. It is disciplined integration.

Practical Principles for Responsible AI-Driven Engineering

To evolve without losing depth, we must establish operational principles:

1. Treat AI as an Assistant, Not an Authority

Every AI-generated suggestion should be treated as a hypothesis.

Acceptance requires comprehension.

2. Review for Understanding, Not Just Correctness

Code reviews must ask:

- Why this design?

- What are the trade-offs?

- What assumptions were made?

3. Implement Bounded Autonomy

Agents should operate within strict permissions:

- Sandboxed execution environments

- Explicit API scopes

- Human-in-the-loop approval for sensitive actions
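The explicit scopes and the human approval step can be enforced in code at the point where an agent calls a tool. The sketch below assumes every tool call is routed through one gate function. `call_tool`, the tool names and the `approve` callback are hypothetical. Sandboxed execution is usually handled at the infrastructure level, for example with containers or VMs, and is not shown here.

```python
from typing import Callable

# Hypothetical tool registry: the only actions this agent can reach at all.
TOOLS: dict[str, Callable[[str], str]] = {
    "read_logs": lambda arg: f"logs for {arg}",
    "restart_service": lambda arg: f"restarted {arg}",
}

SCOPES = {"read_logs", "restart_service"}  # explicit API scope for this agent
SENSITIVE = {"restart_service"}            # actions that need a human in the loop


class PermissionDenied(Exception):
    pass


def call_tool(action: str, arg: str, approve: Callable[[str, str], bool]) -> str:
    """Gate every agent tool call through scope and approval checks."""
    if action not in SCOPES or action not in TOOLS:
        raise PermissionDenied(f"{action!r} is outside this agent's scope")
    if action in SENSITIVE and not approve(action, arg):
        raise PermissionDenied(f"{action!r} on {arg!r} was not approved")
    return TOOLS[action](arg)


def deny_all(action: str, arg: str) -> bool:
    # Stand-in for a human reviewer (e.g. a chat prompt or a ticket in practice).
    return False


print(call_tool("read_logs", "api", approve=deny_all))  # logs for api
try:
    call_tool("restart_service", "api", approve=deny_all)
except PermissionDenied as exc:
    print(exc)  # 'restart_service' on 'api' was not approved
```

Centralizing the check in one function makes the boundary auditable. No agent ever reaches a tool through a path that skips it.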

4. Build Observability First

Autonomous systems require:

- Full audit trails

- Decision logging

- Structured outputs

- Drift monitoring
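The following sketch shows decision logging and a very crude drift signal, using only the standard library. The record fields, `log_decision` and `approval_rate_drift` are hypothetical. In production the sink would be a log file or pipeline rather than a list. Real drift monitoring would also track far richer metrics than one approval rate.

```python
import json
from datetime import datetime, timezone


def log_decision(agent: str, action: str, inputs: dict, reason: str,
                 outcome: str, sink: list) -> dict:
    """Append one structured audit record as a JSON line (hypothetical schema)."""
    record = {
        "ts": datetime.now(timezone.utc).isoformat(),
        "agent": agent,
        "action": action,
        "inputs": inputs,
        "reason": reason,  # the agent's stated rationale, kept for later review
        "outcome": outcome,
    }
    sink.append(json.dumps(record, sort_keys=True))  # one JSON object per line
    return record


def approval_rate_drift(records: list, baseline: float, tolerance: float = 0.1) -> bool:
    """Crude drift signal: flag when the share of 'approved' outcomes moves
    away from a baseline by more than `tolerance`."""
    if not records:
        return False
    outcomes = [json.loads(r)["outcome"] for r in records]
    rate = outcomes.count("approved") / len(outcomes)
    return abs(rate - baseline) > tolerance


audit_log: list = []
log_decision("validator", "review_pr", {"pr": 42}, "tests pass", "approved", audit_log)
log_decision("validator", "review_pr", {"pr": 43}, "tests pass", "approved", audit_log)
print(approval_rate_drift(audit_log, baseline=0.6))  # True: 100% vs a 60% baseline
```

A validator that suddenly approves everything is exactly the kind of silent change that only shows up when decisions are logged in a machine-readable form.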

5. Invest in Data Foundations

Agent performance is only as strong as the data and system architecture underneath it. Clean schemas, governed access and semantic clarity matter more than flashy demos.
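Schema discipline applies to what agents produce as well as to what they consume. Below is a minimal, standard-library-only sketch. It assumes an agent is asked to return a JSON ticket summary. `TicketSummary` and `parse_agent_output` are hypothetical names. In practice, a validation library such as Pydantic or a JSON Schema validator would usually replace the hand-written checks.

```python
import json
from dataclasses import dataclass

ALLOWED_PRIORITIES = {"low", "medium", "high"}
REQUIRED_FIELDS = {"ticket_id", "priority", "summary"}


@dataclass(frozen=True)
class TicketSummary:
    """Hypothetical schema an agent's output must satisfy before it is used."""
    ticket_id: str
    priority: str
    summary: str


def parse_agent_output(raw: str) -> TicketSummary:
    """Validate raw agent output; raise ValueError instead of passing junk on."""
    data = json.loads(raw)  # json.JSONDecodeError (a ValueError) on non-JSON text
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    if set(data) != REQUIRED_FIELDS:
        raise ValueError(f"field mismatch: {sorted(set(data) ^ REQUIRED_FIELDS)}")
    if not all(isinstance(v, str) and v.strip() for v in data.values()):
        raise ValueError("all fields must be non-empty strings")
    if data["priority"] not in ALLOWED_PRIORITIES:
        raise ValueError(f"invalid priority: {data['priority']!r}")
    return TicketSummary(**data)


ok = parse_agent_output('{"ticket_id": "T-1", "priority": "high", "summary": "Login fails"}')
print(ok.priority)  # high

try:
    parse_agent_output('{"ticket_id": "T-2", "priority": "urgent!!", "summary": "x"}')
except ValueError as exc:
    print(exc)  # invalid priority: 'urgent!!'
```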

Speed as Advantage, Understanding as Defense

AI dramatically reduces the cost of producing code.

The competitive advantage is no longer just a proprietary codebase. It is speed — the ability to prototype, validate and iterate rapidly.

But speed without architectural comprehension is fragile.

The engineers who thrive in this era will:

- Use AI to expand capability

- Retain ownership of system design

- Guard their mental models

- Treat orchestration as a core discipline

We are no longer simply writers of instructions.

We are designers of systems that contain intelligence.

And our responsibility is clear: as our systems become more autonomous, they must remain understandable, secure and governed by intentional architecture.